I am a senior undergraduate student majoring in Computer Science and Technology at the College of Intelligence and Computing, Tianjin University. I am interested in machine learning, computer vision, and robotics. In fall 2025 I will join the New Laboratory of Pattern Recognition, Institute of Automation, Chinese Academy of Sciences, to pursue my PhD, supervised by Ap. Lue Fan and Prof. Zhaoxiang Zhang.

🔥 News

- 2025.03: 🎉🎉 I joined Li Auto as an intern, working on 4D feed-forward reconstruction of driving scenes.

- 2025.03: 🎉🎉 My first paper, “Controllable Continual Test-Time Adaptation”, has been accepted to ICME25, and I welcome discussion of it. The paper is available on arXiv, and the code is available on GitHub.

📝 Publications

Controllable Continual Test-Time Adaptation

Ziqi Shi, Fan Lyu, Ye Liu, …, Wei Feng, Zhang Zhang, Liang Wang

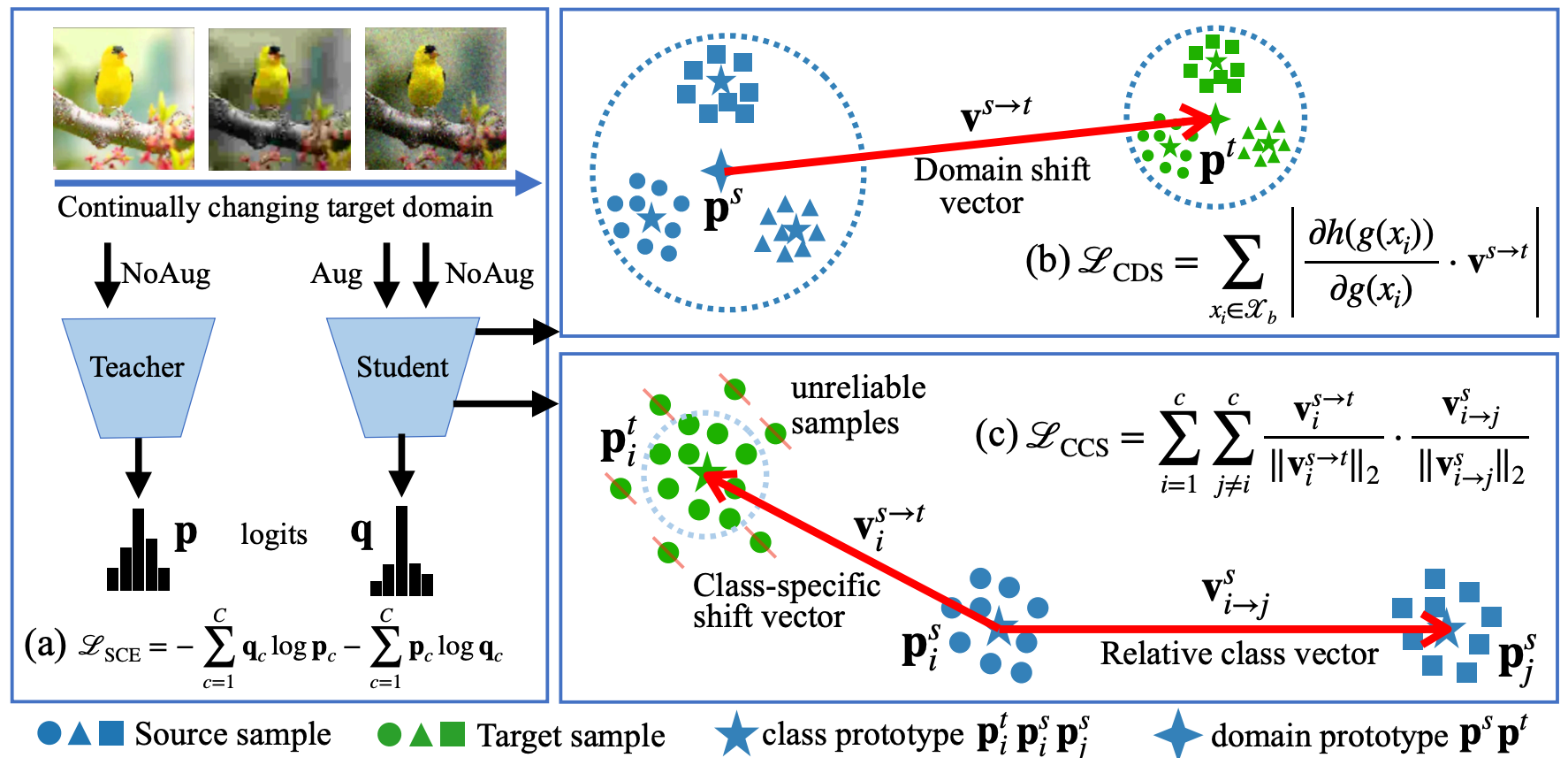

We propose $\textbf{C}$ontrollable $\textbf{Co}$ntinual $\textbf{T}$est-$\textbf{T}$ime $\textbf{A}$daptation (C-CoTTA), which explicitly prevents any single category from encroaching on others, thereby mitigating the mutual influence between categories caused by uncontrollable shifts. Our method also reduces the model's sensitivity to domain transformations, which limits the magnitude of category shifts.

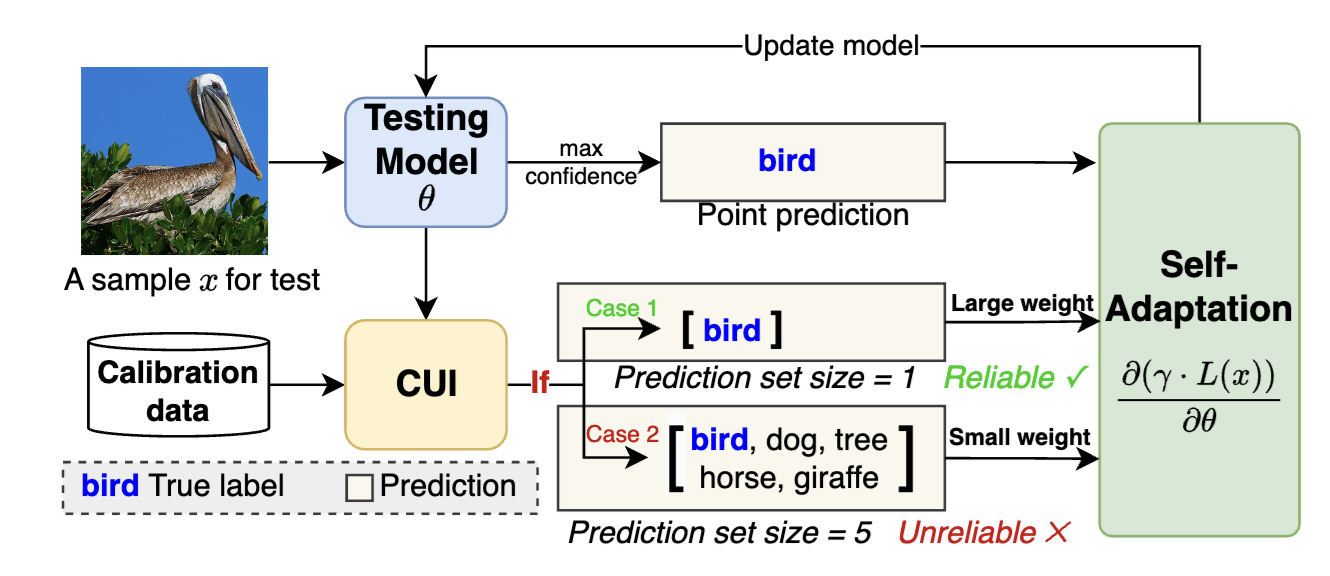

Conformal Uncertainty Indicator for Continual Test-Time Adaptation

Fan Lyu, Hanyu Zhao, Ziqi Shi, …, Zhang Zhang, Liang Wang

We propose a Conformal Uncertainty Indicator (CUI) for continual test-time adaptation (CTTA), leveraging Conformal Prediction (CP) to generate prediction sets that include the true label with a specified coverage probability. Since domain shifts can push the actual coverage below the specified level and make CP unreliable, we dynamically compensate for the coverage by measuring both domain and data differences. Reliable pseudo-labels from CP are then selectively used to enhance adaptation.
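
To make the idea of a prediction set concrete, here is a minimal sketch of standard split conformal prediction in plain Python. It is not the CUI method itself: the dynamic coverage compensation described above is not shown, and the function names `conformal_quantile` and `prediction_set`, as well as the nonconformity score `1 - p(true label)`, are assumptions chosen for illustration.

```python
import math


def conformal_quantile(cal_scores, alpha):
    """Finite-sample-corrected (1 - alpha) quantile of calibration scores."""
    n = len(cal_scores)
    # Rank of the score that serves as threshold: ceil((n + 1) * (1 - alpha)).
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        # Too few calibration points for this alpha: every label is kept.
        return float("inf")
    return sorted(cal_scores)[k - 1]


def prediction_set(probs, qhat):
    """Labels whose nonconformity score 1 - p(label) does not exceed qhat."""
    return [label for label, p in enumerate(probs) if 1.0 - p <= qhat]


# Calibration: score = 1 - probability the model gave to the true label.
# These probabilities are made-up illustration values.
cal_true_probs = [0.95, 0.90, 0.85, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30]
cal_scores = [1.0 - p for p in cal_true_probs]
qhat = conformal_quantile(cal_scores, alpha=0.2)

# Test time: keep every label that is plausible under the threshold.
print(prediction_set([0.70, 0.25, 0.05], qhat))
```

A small prediction set signals a confident prediction, while a large one signals uncertainty. Under domain shift the calibration scores no longer match the test distribution, which is the gap the coverage compensation in the paper is meant to address.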

📝 Projects

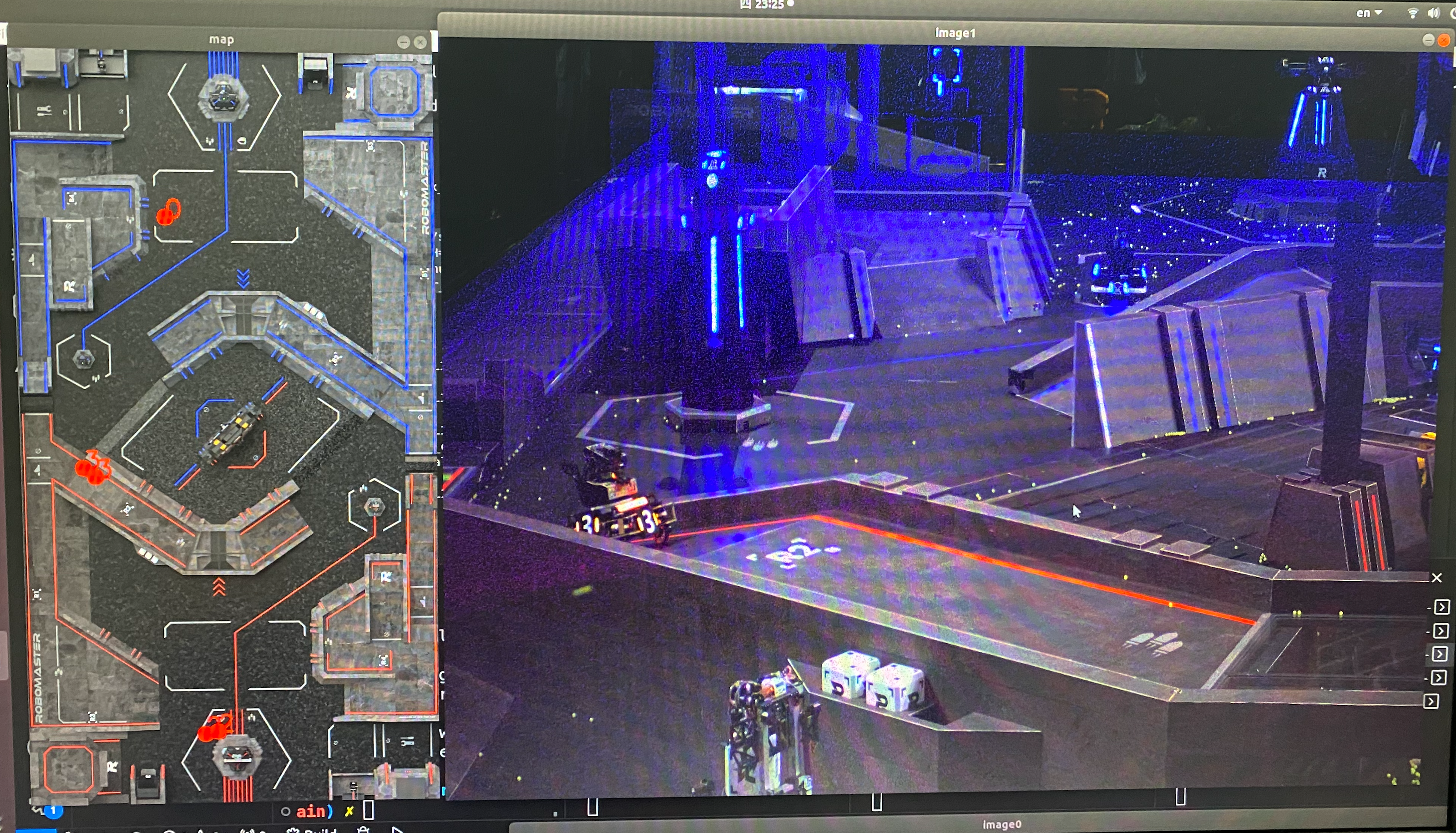

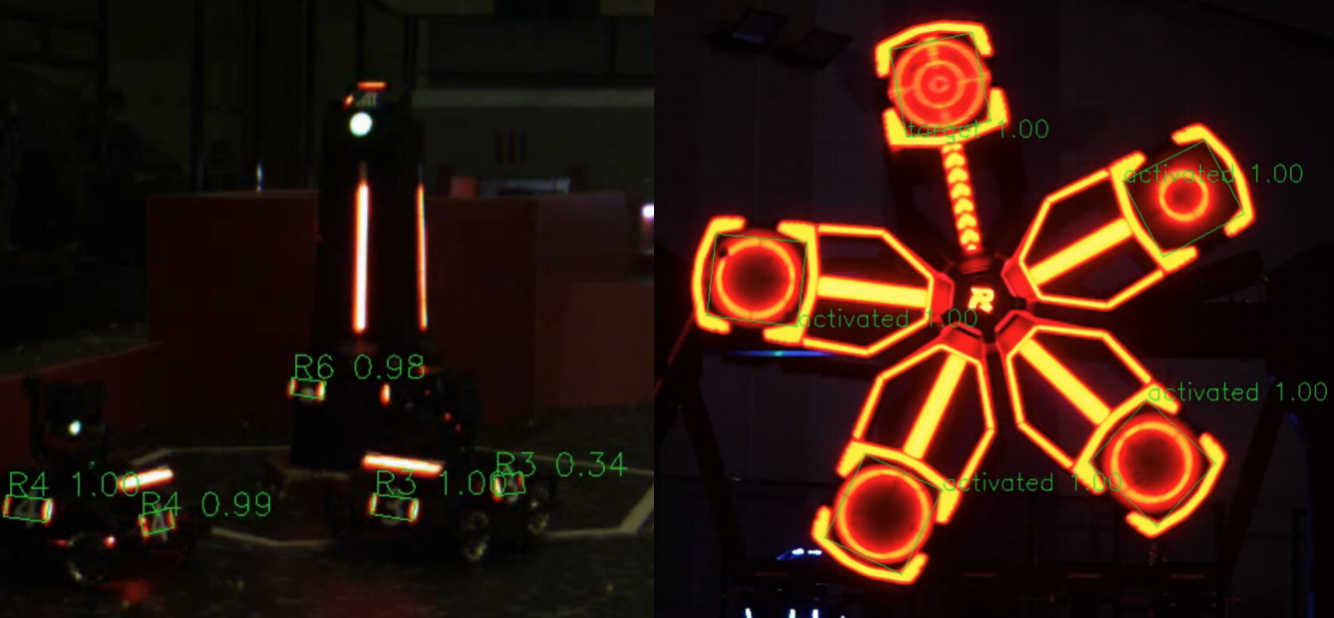

TJURM-Radar

This project implements the radar station robot functionality for RoboMaster competitions using two approaches: monocular RGB and RGB + LiDAR fusion. Both methods feed 4K images captured by industrial RGB cameras directly into target detection models to identify and locate all robots on the field. In the monocular RGB approach, the camera’s extrinsic parameters are calibrated using large-scale PnP to establish the relationship between the camera and field coordinate systems. Depth information is then obtained by rendering the 3D structure of the field. In the RGB + LiDAR fusion approach, the robot’s onboard LiDAR provides more precise depth information for targets, improving localization accuracy. The fusion exploits the complementary strengths of the two sensors: RGB images offer rich texture and color information, while LiDAR supplies accurate depth data.
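
As a rough illustration of how calibrated extrinsics turn a detection into a field position, the sketch below intersects a pixel's viewing ray with the ground. It makes a strong simplifying assumption that the project itself does not make: the field is treated as the flat plane z = 0 rather than a rendered 3D structure. The function name `pixel_to_field`, the distortion-free pinhole model, and all numeric values are hypothetical.

```python
def pixel_to_field(u, v, fx, fy, cx, cy, R, t):
    """Intersect the viewing ray of pixel (u, v) with the field plane z = 0.

    R (3x3 nested list) and t (length-3 list) map field coordinates to
    camera coordinates, X_cam = R * X_field + t, which is the convention
    a PnP solver typically returns.
    """
    # Ray direction in the camera frame (pinhole model, no distortion).
    d_cam = [(u - cx) / fx, (v - cy) / fy, 1.0]
    # Rotate the ray into the field frame: d = R^T * d_cam.
    d = [sum(R[r][c] * d_cam[r] for r in range(3)) for c in range(3)]
    # Camera centre in the field frame: C = -R^T * t.
    C = [-sum(R[r][c] * t[r] for r in range(3)) for c in range(3)]
    if abs(d[2]) < 1e-12:
        return None  # ray is parallel to the ground plane
    s = -C[2] / d[2]  # ray parameter where z reaches 0
    if s <= 0:
        return None  # intersection lies behind the camera
    return (C[0] + s * d[0], C[1] + s * d[1])


# Hypothetical camera 5 m above field point (2, 3), looking straight down.
R_down = [[1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, -1.0]]
t_down = [-2.0, 3.0, 5.0]
print(pixel_to_field(1160, 540, 1000.0, 1000.0, 960.0, 540.0, R_down, t_down))
```

With LiDAR available, the measured depth along the same ray would replace the plane intersection, which is why the fusion approach localizes targets more accurately.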

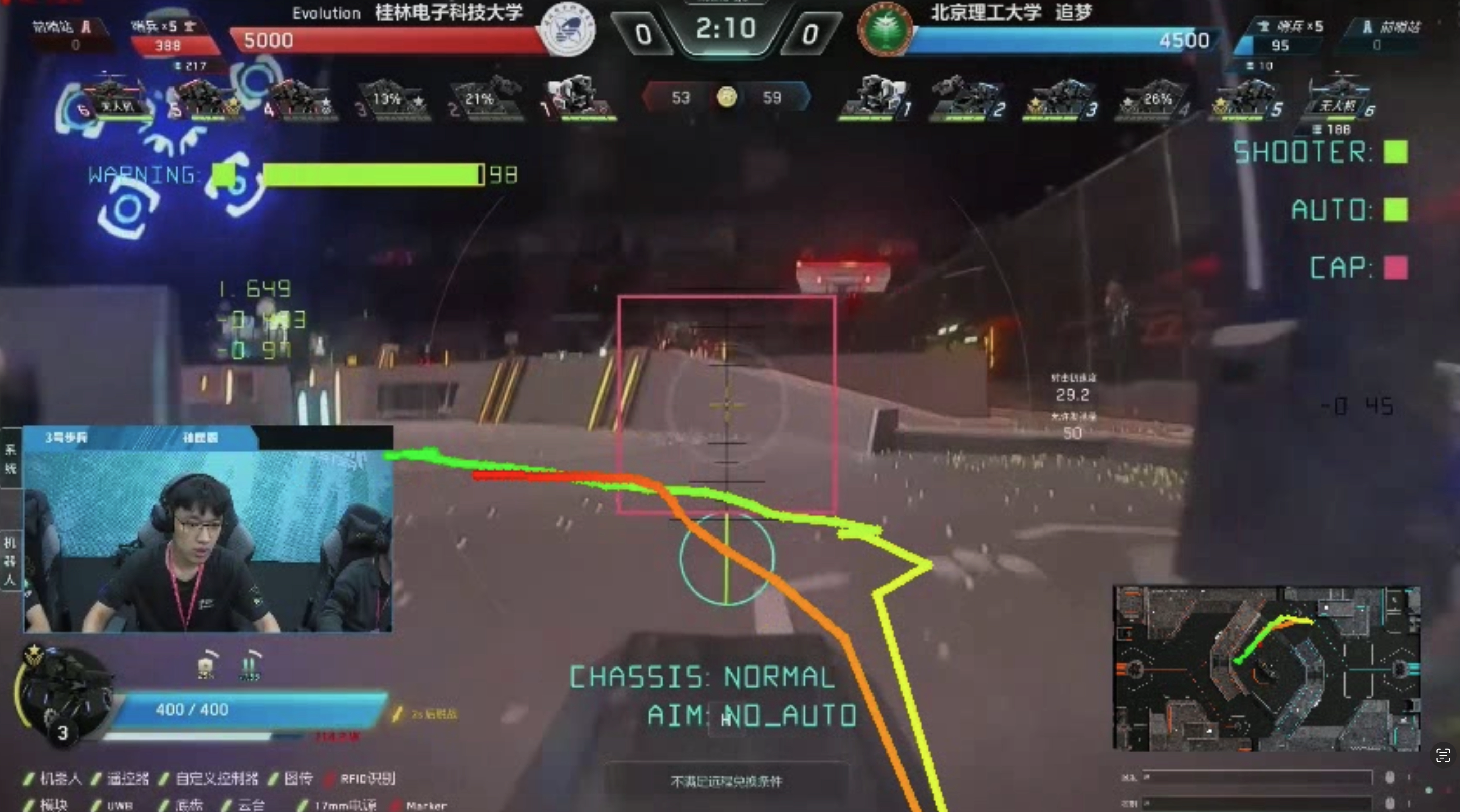

TJURM-AutoDrive

This project uses a large collection of first-person videos from RoboMaster competitions and extracts the robots' movement trajectories from the mini-map with traditional computer vision algorithms. These trajectories and videos form the basis of an autonomous driving dataset. The model extracts image features with common backbone networks and uses a GRU for trajectory prediction. In the RoboMaster context, autonomous driving goes beyond navigation and obstacle avoidance: it also covers trajectory planning (deciding its own route, which in traditional cars is determined by road planning algorithms) and autonomous decision-making (choosing where to go, which in traditional cars is specified by the driver). These three capabilities correspond to increasingly distant prediction horizons. (The project is in active development, and interested parties are welcome to get in touch.)

TJURM-ArmorDiffuse

This project addresses the limited datasets and high annotation costs in RoboMaster competitions by using diffusion models to augment existing data. Specifically, we designed a keypoint-conditional generation model that produces images together with the labels required for training detection models. Unlike existing approaches such as ControlNet, which inefficiently render keypoints as images, we use the keypoint vectors directly as conditions. Extensive experiments have validated the effectiveness of this conditional generation approach.

TJURM-Detector

A customized keypoint detection model for RoboMaster competitions, modified from YOLOv5. It detects the keypoints of armor plates and the energy mechanism and outputs their coordinates in the image coordinate system for the subsequent PnP positioning algorithm. The model is deployed on the Jetson Xavier NX edge computing device, where TensorRT accelerates inference and knowledge distillation provides lossless compression of the model for faster inference.
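
For readers unfamiliar with knowledge distillation, the following is a minimal sketch of the classic soft-target loss, where a small student is trained to match a larger teacher's temperature-softened outputs. It is a generic illustration only: the distillation scheme actually used for this keypoint detector is not described here, and the function names and logit values are hypothetical.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Numerically stable log-softmax of temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_sum = m + math.log(sum(math.exp(z - m) for z in scaled))
    return [z - log_sum for z in scaled]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened outputs, scaled by temperature^2."""
    log_p = log_softmax(teacher_logits, temperature)  # teacher targets
    log_q = log_softmax(student_logits, temperature)  # student predictions
    kl = sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p, log_q))
    return temperature ** 2 * kl


# Made-up logits: the loss shrinks as the student approaches the teacher.
teacher = [4.0, 1.0, -2.0]
print(distillation_loss([1.0, 1.0, 1.0], teacher))
print(distillation_loss([3.5, 1.2, -1.8], teacher))
```

The temperature controls how much of the teacher's knowledge about non-top classes is exposed to the student; in practice this term is usually combined with the ordinary task loss.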

📖 Education

- 2022.09 - Present, College of Intelligence and Computing, Tianjin University

💻 Internships

- 2025, Li Auto.

- 2024, New Laboratory of Pattern Recognition, Institute of Automation, Chinese Academy of Sciences.

- 2023, Natural Language Processing Group, Department of Computer Science and Technology, Nanjing University.

- 2024, Visual Intelligence Lab, Tianjin University.

- 2024, Lab of Machine Learning and Data Mining, Tianjin University.

📖 Service

- 2024.08 - 2025.02, Captain of the Peiyang Robot Team, Tianjin University.

- Reviewer: ICLR25, ICME25.